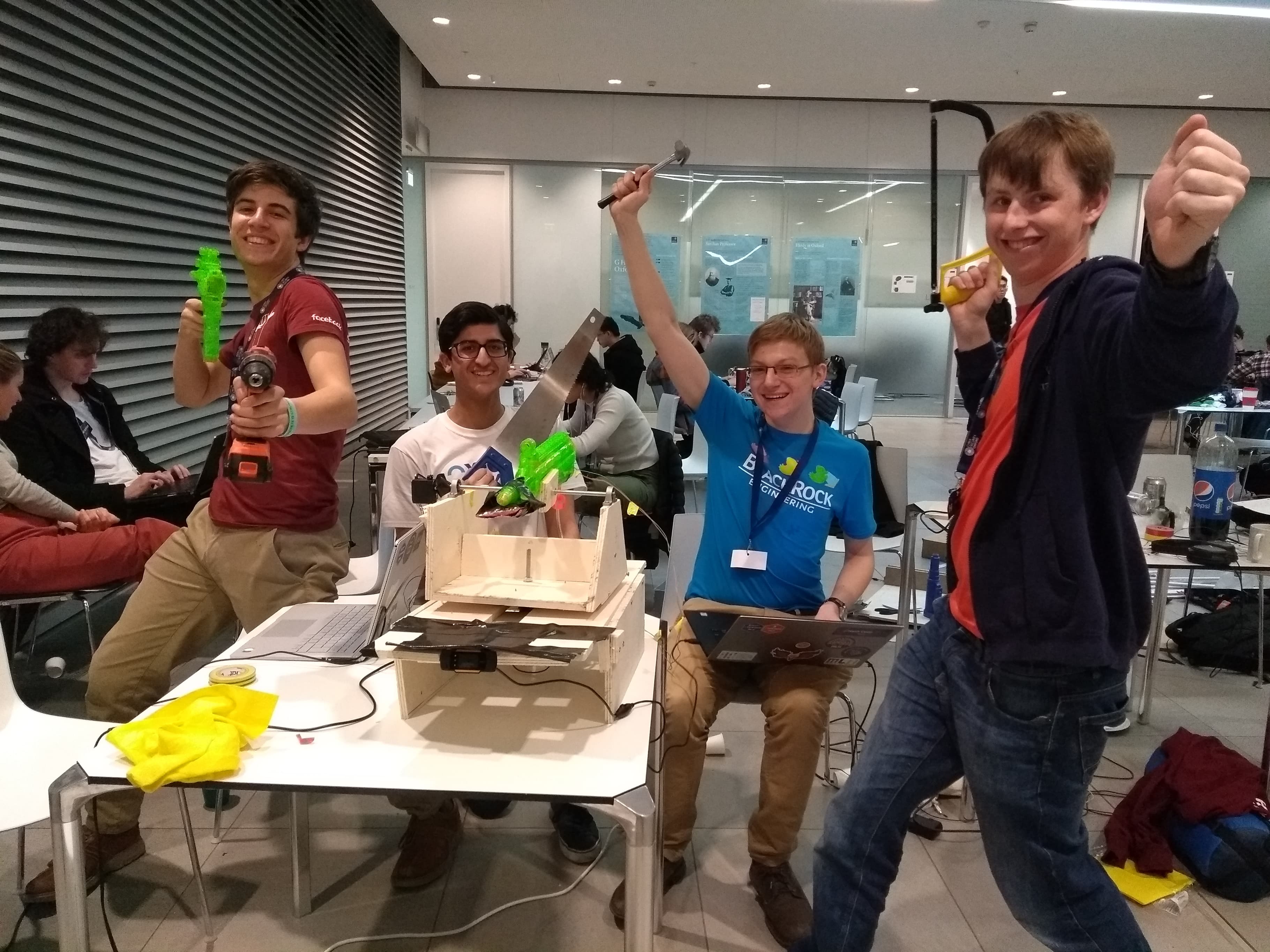

My hackathon team celebrating our latest accomplishment

Over the last couple of years, my team and I have realised that hardware hacks are usually the most rewarding hackathon projects. Most of us are in our element in the world of software, but we love the challenge of working in unfamiliar territory, and there’s something inherently satisfying about finishing a piece of hardware.

This time around we wanted to stay in the space of drinks (see my post about our last Oxford Hack project, IoTea), but we still wanted to try something a little different. After a short brainstorming session we came up with the idea: a machine that delivers a drink to its users by launching it into their face! The project was the perfect scale for a hackathon: low budget, easy to split into parts, and completely impractical! Having collected the supplies we needed (an electric water pistol, a Raspberry Pi Zero, a couple of servos and some scrap wood), we set to work.

Hardware

I wasn’t heavily involved in the hardware side of the project. It consisted of building a wooden box to hold the turret, wiring up two servos to be controlled from the Pi using PWM, and sawing the trigger off our water pistol so we could replace it with a relay circuit, which let the Pi fire it at will.
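For anyone reproducing the servo side, here is some background. Hobby servos are usually driven with a 50 Hz PWM signal whose pulse width sets the angle. The sketch below assumes a pulse of 1.0 to 2.0 ms over 0 to 180°. That range is common but not universal, so check your datasheet. `servo_duty_cycle` and its parameters are hypothetical, not our original code.

```python
def servo_duty_cycle(angle_deg: float,
                     min_pulse_ms: float = 1.0,
                     max_pulse_ms: float = 2.0,
                     frequency_hz: float = 50.0,
                     max_angle_deg: float = 180.0) -> float:
    """Convert a servo angle into a PWM duty cycle, as a percentage.

    The pulse-width limits are assumed defaults; replace them with the
    values from your servo's datasheet.
    """
    # Clamp so we never command the servo past its mechanical limits.
    angle = max(0.0, min(max_angle_deg, angle_deg))
    # Interpolate the pulse width linearly between the two limits.
    pulse_ms = min_pulse_ms + (angle / max_angle_deg) * (max_pulse_ms - min_pulse_ms)
    # At 50 Hz one PWM period lasts 20 ms.
    period_ms = 1000.0 / frequency_hz
    return 100.0 * pulse_ms / period_ms
```

With these defaults, 0° gives 5%, 90° gives 7.5% and 180° gives 10%. You might drive the servo with the RPi.GPIO library's software PWM, though that is an assumption and any PWM source works. In that case the returned percentage is the value you would pass to `ChangeDutyCycle`.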

We mounted our USB webcam on the front of the box, fixed so that it stayed stationary while the turret rotated. This made the targeting code a lot simpler, since each pixel in the image always corresponds to the same direction relative to the box.

Targeting

The targeting code made fairly heavy use of Microsoft Azure’s Face API, with some interesting calculations on top. The API is really nicely designed. You send an image in a simple HTTP POST request, and it returns JSON describing every face it can see, such as its position and facial landmarks. The one downside is latency: a request took approximately one second to come back, so ~~victims~~ users of our creation had to stay still while it targeted.
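Each detected face comes back as an object with a `faceRectangle`. If the request sets `returnFaceLandmarks=true`, it also carries a `faceLandmarks` object that includes `pupilLeft` and `pupilRight`. Here is a minimal sketch of turning that JSON into the pixel position and pupil spacing that the targeting maths needs. It assumes landmarks were requested. It also uses a hypothetical `target_from_faces` helper that aims at the largest face, which is our own choice of policy.

```python
import math
from typing import Optional, Tuple


def target_from_faces(faces) -> Optional[Tuple[float, float, float]]:
    """Return (x, y, ipd_px) for the largest detected face, or None.

    `faces` is assumed to be the parsed JSON list from a Face API detect
    call made with returnFaceLandmarks=true.
    """
    if not faces:
        return None
    # Hypothetical policy: aim at the biggest (probably closest) face.
    face = max(
        faces,
        key=lambda f: f["faceRectangle"]["width"] * f["faceRectangle"]["height"],
    )
    left = face["faceLandmarks"]["pupilLeft"]
    right = face["faceLandmarks"]["pupilRight"]
    # Aim point: midway between the pupils, in image pixel coordinates.
    x = (left["x"] + right["x"]) / 2.0
    y = (left["y"] + right["y"]) / 2.0
    # Interpupillary distance in pixels (Euclidean, so a tilted head still works).
    ipd_px = math.hypot(right["x"] - left["x"], right["y"] - left["y"])
    return x, y, ipd_px
```

Returning `None` for an empty or missing result lets the caller simply skip a frame in which nobody was detected.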

We used OpenCV to capture a frame from the webcam and encode it for upload, and then, thanks to the magic of Python, the code to make our requests was super simple:

```python
import http.client
import json


def request_face(self, body):
    # POST the encoded image to the Face API detect endpoint and return the
    # parsed JSON (a list of detected faces), or None if the request fails
    try:
        conn = http.client.HTTPSConnection(self.face_url)
        conn.request("POST", "/face/v1.0/detect?%s" % self.face_params, body, self.face_headers)
        response = conn.getresponse()
        data = json.loads(response.read())
        conn.close()
        return data
    except Exception as e:
        # Not every exception has errno/strerror, so print the exception itself
        print("Face API request failed: {0}".format(e))
        return None
```

To figure out how far away a person was from the camera, we needed a measurement that is fairly consistent from person to person. Thankfully such a metric exists: the interpupillary distance (IPD), the distance between the centres of the pupils. Surveys have shown that the average human IPD is approximately 63mm, with a fairly small standard deviation. Perfect! If we measure how many pixels apart someone’s pupils are, we know roughly how far away they are. Now all we need is the FOV of the camera and the distance between the camera and the turret (both easy to measure), as well as the velocity of the liquid exiting the turret, and we can use some maths to calculate what angle our turret needs to be at!
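Here is a worked example of that distance calculation. It is a sketch that uses an assumed 60° horizontal FOV and a 640-pixel-wide frame rather than our webcam’s real numbers. The pupils span a fraction of the image width, and that fraction gives the angle they subtend. A real IPD of 63mm subtends that angle at distance d = IPD / (2 tan(angle / 2)). `distance_from_ipd` is a hypothetical helper:

```python
import math


def distance_from_ipd(ipd_px: float, image_width_px: int, theta_x: float,
                      real_ipd_m: float = 0.063) -> float:
    """Estimate the camera-to-face distance in metres from the pupils' pixel spacing.

    theta_x is the camera's horizontal field of view in radians.
    """
    # Angle subtended by the pupils, treating angle as proportional to pixels.
    angular_separation = (ipd_px / image_width_px) * theta_x
    # A real IPD subtends that angle when ipd = 2 * d * tan(angle / 2).
    return real_ipd_m / (2 * math.tan(angular_separation / 2))


print(distance_from_ipd(60, 640, math.radians(60)))
```

With pupils 60 pixels apart this gives roughly 0.64 m. Halve the pixel spacing and the estimate roughly doubles, as you would expect for an object that is twice as far away.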

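```python
import math


# Given the face centre (x, y) and pupil spacing (ipd) in pixels, plus the
# image width and height, return (yaw, pitch) for the turret
def get_yaw_pitch(self, x, y, width, height, ipd):
    average_ipd = 0.0629  # average human IPD in metres
    # Angle subtended by the pupils (assumes angle scales linearly with pixels)
    angular_separation = (ipd / float(width)) * self.theta_x
    # Distance at which an average IPD subtends that angle
    distance = average_ipd / (2 * math.tan(angular_separation / 2))
    yaw = ((float(x) / float(width)) * 2) - 1  # value between -1 and 1, where the far edges are the edge of the FOV
    # Height (metres) of the camera's view at that distance
    v_height = 2 * distance * math.tan(self.theta_y / 2)
    # Height of the face above the camera axis: image y grows downwards,
    # so the top row maps to +v_height / 2 and the bottom row to -v_height / 2
    v_distance = (v_height / 2) - (float(y) / float(height)) * v_height
    # Shift from camera-relative to turret-relative coordinates
    (x_relative, y_relative) = (distance + self.offset_x, v_distance - self.offset_y)
    pitch = math.atan2(y_relative, x_relative)
    return (yaw, pitch)
```

Now all that was left was sending the yaw and pitch to the servos using PWM. To calibrate them you’ll need the datasheet that came with your servos, since the pulse widths we used were specific to our motors. Thanks to Python’s built-in modules, setting them up was very straightforward.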
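The `yaw` returned by `get_yaw_pitch` is a normalised image position between -1 and 1 rather than an angle, so it needs converting before it reaches a servo. The sketch below shows one way to do that under three assumptions:

* the camera behaves like a pinhole camera;
* both servos are centred at 90° and their angle increases in the same direction as the image coordinates;
* the camera and turret share a vertical axis, so any sideways offset is ignored.

The `aim_angles` helper is hypothetical:

```python
import math


def aim_angles(yaw_norm: float, pitch_rad: float, theta_x: float,
               centre_deg: float = 90.0,
               limits_deg: tuple = (0.0, 180.0)) -> tuple:
    """Turn (yaw, pitch) from get_yaw_pitch into (pan, tilt) servo angles in degrees.

    theta_x is the camera's horizontal field of view in radians.
    """
    # For a pinhole camera, a pixel at normalised horizontal offset u
    # lies atan(u * tan(theta_x / 2)) away from the optical axis.
    yaw_deg = math.degrees(math.atan(yaw_norm * math.tan(theta_x / 2)))
    pitch_deg = math.degrees(pitch_rad)
    lo, hi = limits_deg
    # Offset from the assumed centre position and clamp to the servo's range.
    pan = min(hi, max(lo, centre_deg + yaw_deg))
    tilt = min(hi, max(lo, centre_deg + pitch_deg))
    return pan, tilt
```

Clamping keeps a face near the edge of the frame, or a wildly wrong distance estimate, from driving the servos into their end stops. The result can then be converted into a duty cycle for your particular servos.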

We all had a lot of fun at the hackathon, and the end results speak for themselves: